YL2 Update May

We hope you've had a great May!

This month feels like we bumped into all sorts of different unexpected obstacles that required time and effort to solve. In last month's update when we mentioned the "seamless tech" we were working on, we thought it was more or less complete - all we needed was to assemble some content to try it out. Well, as it turned out, there was a bit more to it than we anticipated...

In case you don't know, essentially what this "seamless tech" is all about is eliminating seams* between different parts of the body. In YL2, you will be able to choose the head, hands and feet from an ever increasing selection of different models that you can combine in any way you like. For example, we won't just be providing one type of hand, but several different ones, from different species, of different shapes and with different skeletons, and more will be added with time. Likewise with the heads, they too will come from different species etc. However, as you might imagine, having separate models in this fashion inevitably creates visible seams where they connect with the body. This is a huge problem, and it's what we've been trying to solve.

(* Seams between parts, not UV seams which is a completely different problem.)

This problem comes with several different challenges. The first one, or the most basic one, is perhaps the problem of actually making the meshes themselves (the models) look like a single one. This can be accomplished by making them appear to be "welded" together, and this is something we already showed off in a previous update.

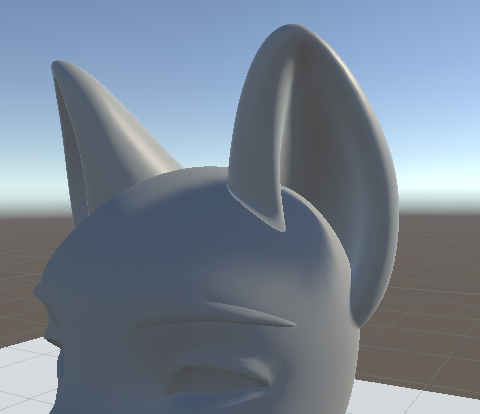

(Making mesh seamless in neck area.)

Another problem is how skeletons are supposed to be merged together in such a way that all the different parts are water-tight regardless of how the bones are deforming the mesh. Furthermore, we wanted to have flexibility in how we can author skeletons to allow parts to have different numbers of fingers or just have completely different shapes (for example, the feet of a crow are completely different from those of a wolf). This, too, is something we've already created tech for previously (although we will probably need to tweak it a little bit once we get to creating more versatile content for it).

Lastly, we need to make the maps (textures) appear seamless where these different models connect, because even if the meshes appear seamless, the maps can still give them away. This is a difficult problem to solve due to the complex setup of all the possible combinations of models. It would be a nightmare to manually make each map fit with the other, so we wanted to come up with a tech that allows us to bake maps for each model individually while still having perfect seamless results. And this is what we've been working on this past time when we've been talking about the "seamless tech".

To solve this map problem, we need several different components working together. The content (mesh) has to be authored in a very specific way and then rendered using a custom shader. Or at least, those were the two components we thought would be sufficient to solve it. For these two things - the content and shader - we had an elaborate plan for how they could be implemented, and we followed this plan meticulously. But once we had actually implemented the shader, created all the content and started testing, it didn't work! Or rather, it would have worked, if it wasn't for the fact that we had forgotten to consider a very important trait of how normal maps operate - you cannot blend normal maps across different tangent spaces! This was an utter failure on our part, forgetting to include such an important property in our plans, and this is where we encountered our first big obstacle for the month. And from this point onward, it felt a bit like this gif from the "Malcolm in the Middle" show, as we had to spend time implementing several systems we hadn't originally planned for:

The first step to solving this map problem was figuring out a way to blend normal maps across different tangent spaces. In short, the way we ended up doing it was by feeding the shader with additional custom data that we baked into the mesh, so it would have enough to parse each normal map properly. This did however come with some other consequences, as it would mean we would have to feed this data each time the mesh is changed (for example, when blend shapes are applied), meaning we had to come up with a way to calculate the tangent spaces in a fast and efficient manner.
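To illustrate why the extra per-vertex data matters, here is a minimal sketch in Python (not the actual YL2 shader, whose details are not given here). It assumes the baked data is each mesh's tangent/bitangent/normal basis, and the function names are made up for the example. Each tangent-space normal is first rotated into a shared object space, and only then are the two blended:

```python
import math

def normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(c / length for c in v)

def tangent_to_object(n_ts, tangent, bitangent, normal):
    """Rotate a tangent-space normal into object space using its TBN basis."""
    return tuple(
        n_ts[0] * tangent[i] + n_ts[1] * bitangent[i] + n_ts[2] * normal[i]
        for i in range(3)
    )

def blend_normals(n_a, tbn_a, n_b, tbn_b, weight):
    """Blend two normals sampled from maps with different tangent spaces.

    Both samples are moved into object space first, so the mix happens
    between vectors that share one coordinate system.
    """
    a = tangent_to_object(n_a, *tbn_a)
    b = tangent_to_object(n_b, *tbn_b)
    mixed = tuple((1.0 - weight) * x + weight * y for x, y in zip(a, b))
    return normalize(mixed)

# Two tangent frames on the same surface, rotated 90 degrees around the normal.
tbn_a = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
tbn_b = ((0.0, 1.0, 0.0), (-1.0, 0.0, 0.0), (0.0, 0.0, 1.0))

# Both samples describe the same object-space direction (+X), but their
# tangent-space values differ because the frames differ.
blended = blend_normals((1.0, 0.0, 0.0), tbn_a, (0.0, -1.0, 0.0), tbn_b, 0.5)
```

Mixing the raw tangent-space values `(1, 0, 0)` and `(0, -1, 0)` directly would give a vector pointing diagonally, even though both samples encode the same direction. That is the failure mode described above.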

As with the mesh normals that we've talked about in previous updates, we ended up implementing a GPU powered tangent space calculator. Normally, recalculating the tangent spaces would take an undesirable amount of time on the CPU, but by creating a GPU implementation, the results are near instant. Naturally, it did take some time to implement this system though.
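For readers unfamiliar with what a tangent space calculation involves, below is a small CPU sketch in Python of the commonly used per-triangle approach: derive the direction in which the U texture coordinate increases, accumulate it per vertex, then orthogonalize against the vertex normal. This is an assumed, textbook-style version for illustration only, and it says nothing about how the GPU implementation is structured.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def scale(a, s):
    return tuple(x * s for x in a)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def compute_tangents(positions, uvs, normals, triangles):
    """Return one unit tangent per vertex."""
    tangents = [(0.0, 0.0, 0.0)] * len(positions)
    for i0, i1, i2 in triangles:
        e1 = sub(positions[i1], positions[i0])
        e2 = sub(positions[i2], positions[i0])
        du1, dv1 = sub(uvs[i1], uvs[i0])
        du2, dv2 = sub(uvs[i2], uvs[i0])
        det = du1 * dv2 - du2 * dv1
        if abs(det) < 1e-12:
            continue  # degenerate UV mapping, skip this triangle
        # Solve e1 = du1*T + dv1*B and e2 = du2*T + dv2*B for T.
        t = scale(sub(scale(e1, dv2), scale(e2, dv1)), 1.0 / det)
        for i in (i0, i1, i2):
            tangents[i] = add(tangents[i], t)

    result = []
    for t, n in zip(tangents, normals):
        # Gram-Schmidt: remove the part of the tangent that points along the normal.
        t = sub(t, scale(n, dot(n, t)))
        length = math.sqrt(dot(t, t))
        # The fallback axis for vertices without a usable tangent is an arbitrary choice.
        result.append(scale(t, 1.0 / length) if length > 0.0 else (1.0, 0.0, 0.0))
    return result

# One triangle in the XY plane whose UVs match its XY coordinates.
positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
uvs = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
normals = [(0.0, 0.0, 1.0)] * 3
tangents = compute_tangents(positions, uvs, normals, [(0, 1, 2)])
```

Because this has to be redone whenever the vertices move (for example when blend shapes are applied), the cost scales with the triangle count of the character, which is what makes a fast implementation worthwhile.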

Ok, so now that we are feeding the shader with this additional data with the help of the custom tangent space GPU calculator, surely we have enough to make this work? WRONG!

Well, wrong for character meshes at least. For regular meshes, it would be sufficient. But characters are a special type of mesh, called a skinned mesh, that goes through additional processing before rendering, namely deforming the mesh according to the location and orientation of the bones. This process is known as "skinning". Unity provides a "Skinned Mesh Renderer" which automates this process. During the skinning process, not only are the vertices moved, but the normals and tangents of the mesh are deformed as well. Since we are feeding our shader with additional mesh-specific data, we needed that data to be skinned too, but unfortunately, Unity's skinned mesh renderer doesn't support skinning of custom data. So in order to solve this problem, we ended up writing our own custom skinned mesh renderer, that skins all the mesh data - both the basic one and our own - and feeds it into the shader.
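As a rough picture of what "skinning custom data" means, here is a minimal linear blend skinning sketch in Python. It is an illustration under assumptions, not the custom renderer itself: bones are assumed to be rigid (rotation plus translation, stored as 3x4 row-major matrices), and the extra per-vertex data is assumed to be direction vectors, which get rotated exactly like the normal and tangent. The name `seam_tangent` is hypothetical.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v) if length > 0.0 else tuple(v)

def transform_point(m, p):
    """Apply rotation and translation of a 3x4 bone matrix to a position."""
    return tuple(m[r][0] * p[0] + m[r][1] * p[1] + m[r][2] * p[2] + m[r][3] for r in range(3))

def transform_direction(m, d):
    """Apply only the rotation part of a 3x4 bone matrix to a direction."""
    return tuple(m[r][0] * d[0] + m[r][1] * d[1] + m[r][2] * d[2] for r in range(3))

def skin_vertex(position, directions, influences, bone_matrices):
    """Linear blend skinning for one vertex.

    directions: dict of name -> direction vector (normal, tangent, custom data)
    influences: list of (bone_index, weight) pairs whose weights sum to 1
    """
    skinned_pos = [0.0, 0.0, 0.0]
    skinned_dirs = {name: [0.0, 0.0, 0.0] for name in directions}
    for bone, weight in influences:
        m = bone_matrices[bone]
        p = transform_point(m, position)
        for i in range(3):
            skinned_pos[i] += weight * p[i]
        # Every direction vector, built-in or custom, goes through the same bone blend.
        for name, d in directions.items():
            td = transform_direction(m, d)
            for i in range(3):
                skinned_dirs[name][i] += weight * td[i]
    return tuple(skinned_pos), {name: normalize(d) for name, d in skinned_dirs.items()}

# A single bone rotated 90 degrees around Z and moved 2 units along Z.
bone = ((0.0, -1.0, 0.0, 0.0),
        (1.0, 0.0, 0.0, 0.0),
        (0.0, 0.0, 1.0, 2.0))
pos, dirs = skin_vertex(
    (1.0, 0.0, 0.0),
    {"normal": (0.0, 0.0, 1.0), "seam_tangent": (1.0, 0.0, 0.0)},
    [(0, 1.0)],
    [bone],
)
```

A stock skinned mesh renderer performs the equivalent of this for positions, normals and tangents only. The point of a custom one is that additional vectors can ride along through the same blend.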

So yeah, in pursuit of solving the seam problem, we ended up on a journey, not only implementing a GPU tangent space calculator, but also a custom skinned mesh renderer. It took time, effort and energy, but we finally made it to the finish line.

Seamless tech

Here's the result of our research.

https://gyazo.com/86209fcf2010986308ab2f00bca72162

(Seamless tech on)

https://gyazo.com/4c7c7638a131f0669b9b46e2877ac84b

(Seamless tech off - for comparison)

As you can see, the seamless tech makes a huge difference where the hand connects to the body. Other areas of the body - where the head connects to the neck and where the feet connect to the legs - will receive the same treatment.

We're super excited about this tech, as it puts us a step closer to making the characters look like actual, real models rather than something that was just assembled in a character editor.

YL2 genitalia options

One fan by the nickname "Accenture" posted some suggestions in the forum regarding genital options for the character editor in YL2, which you can read about here. Essentially, "Accenture" suggested that characters should be possible to configure to have no genitalia, and that balls should be possible to toggle on or off as well on any model.

In our original model, the balls were a forced part of the character mesh and wouldn't allow for such customization, but upon reading this feedback, we reevaluated our plans and took measures to accommodate these suggestions. The balls have now been moved out of the character mesh, and exist as a separate model. We like this idea, because it does make things a lot easier for us regarding shape authoring and transfer between models. Furthermore, it will also allow us to create many different kinds of shapes for the balls, improving customization and versatility, all while reducing complexity. We are so happy these suggestions were voiced!

Since the balls are a separate model, it also means you'll be able to add them to any character, even those without shafts, or without any genitalia at all. (We haven't gotten to implementing a genderless model yet, but we intend to in time. A genderless model is far easier to model than one with genitalia.)

There are some drawbacks with this type of setup however. By having the balls as a separate model, there is inevitably a visible seam/clipping where the body and the balls intersect. While we do like the increased customizability and reduced complexity that comes with moving the balls to their own mesh, we weren't happy about this clipping issue. So this month we've been researching different methods of trying to mitigate this problem.

Screen space clipping relief

We have implemented a shader that operates in "screen space" to blend models together with each other. It is our attempt at reducing clipping between intersecting models, for example balls clipping with the body they're attached to. Below are images of our research:

https://gyazo.com/149fc3f72bc124814eeb41d62f5682df

(Clipping relief on.)

https://gyazo.com/8099c36a33a800f1943472a2e44c64e8

(Clipping relief off.)

https://gyazo.com/8011db88f9ead7f2b72df270ba8f4558

(Toggling tech on and off.)
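The post does not spell out how the shader decides where to blend. One common way to soften an intersection in screen space, similar in spirit to soft particles, is to compare the depth of the attached model with the depth of the body behind it at the same pixel and fade between the two colors when they are close. The Python sketch below shows that idea only; the function names and the `blend_distance` parameter are assumptions for illustration, not the YL2 shader.

```python
def smoothstep(edge0, edge1, x):
    """Smooth 0..1 ramp between edge0 and edge1, clamped outside that range."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)

def blend_weight(attachment_depth, body_depth, blend_distance):
    """0 where the surfaces intersect, rising to 1 once the attachment is
    blend_distance in front of the body (depths measured from the camera)."""
    return smoothstep(0.0, blend_distance, body_depth - attachment_depth)

def relieve_clipping(attachment_color, body_color, attachment_depth, body_depth, blend_distance):
    """Per-pixel color for the attachment, faded towards the body near the intersection."""
    w = blend_weight(attachment_depth, body_depth, blend_distance)
    return tuple(b + (a - b) * w for a, b in zip(attachment_color, body_color))

# Pixel halfway through the blend zone: the result is an even mix of both colors.
mixed = relieve_clipping((1.0, 0.0, 0.0), (0.0, 0.0, 1.0), 0.25, 0.5, 0.5)
```

With a hard edge, the weight would jump from 0 to 1 exactly at the intersection line. Spreading that transition over a small depth range is what hides the line.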

What's cool about this tech is that we could use it for other things too, such as ears on the head.

https://gyazo.com/afab21285dc85518627bdec8be90c783

https://gyazo.com/4873d46a0cb1c098bb6e9d06a34fc3cd

We also produced a variant of this shader that uses the G-buffer to blend the meshes. The benefit of using the G-buffer is that we can blend each channel individually. Here's a comparison of that technique vs the one above:

https://gyazo.com/7ae3e904c9aa4656874b561f31b0b62e

(G-buffer vs simple blending.)

As you can see, the G-buffer one does produce a more "correct" looking surface where the models intersect, due to the normals being blended separately in their own layer.

However, the G-Buffer version doesn't play so nicely with shadows:

Here's the simple blending for comparison:

The G-buffer version also comes with other drawbacks, namely that it is a bit hacky and requires much more custom code in order to operate properly. Maintenance is an issue here too, as new versions of Unity might break the shader. This is especially a concern as we intend to move over to the HD Rendering Pipeline of Unity at some point in the future, which most likely has a completely different buffer setup, and that might even change more over time as the HDRP is a WIP. Sometimes going with the easier solution makes more sense long term, and in this case, I think the easier solution might even look better.

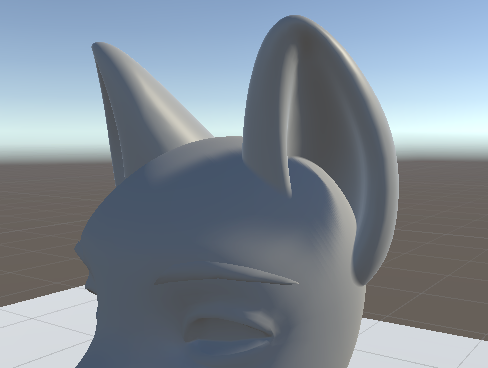

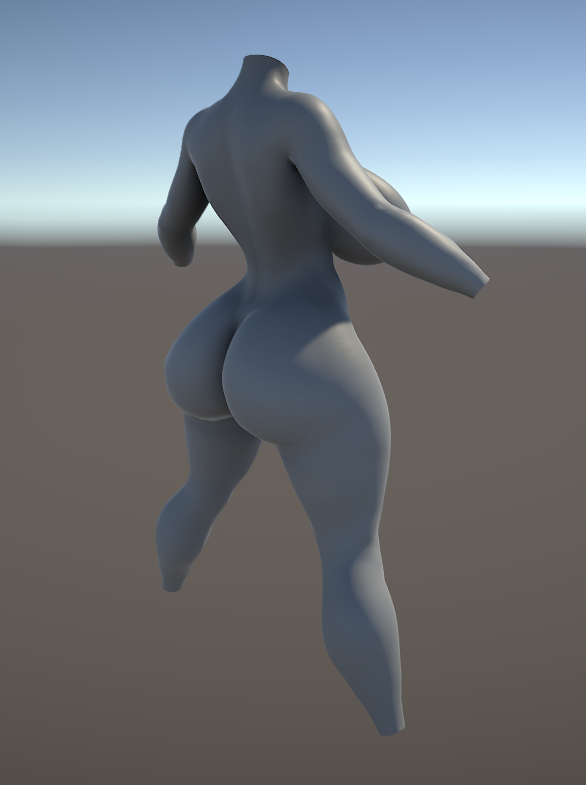

Sex Goddess shape

While dogson has for the most part been busy working on content for the seamless tech, he has also been working on shapes on the side. Here's a "SexGodess" shape he made:

In YL2 we intend to create many different "body types" that you can select from. Each body type will be possible to tweak in terms of belly and breast inflation. You will also be able to mask and blend body types individually in the shape layering stack.

Summary

This month we ended up on a longer-than-expected journey in our pursuit of creating seamless characters for our character creator. What we thought would be an easy problem to solve turned out to be a fair bit more complex than anticipated. In the end we finally got what we wanted, but not without a tremendous amount of time and effort, having us implement several techs we originally had no idea would be required.

We've also made changes to our characters, separating the balls from being forcibly placed inside the male variants. This opens up for far more customizability while at the same time reducing complexity for us. It comes with some drawbacks however, as separating models in this fashion does produce seams between them, but we have been researching a screen space clipping relief tech that looks promising in mitigating this issue.

We are as excited as ever about the development. Things don't always go the way you think, as there are always potential obstacles lurking in the shadows. But we relish the challenge and are determined to reach our goals!

- odes

P.S.

I know I mentioned a couple of texts back that I was going to start writing shorter texts. Well, it seems like that hasn't really been the case so far, haha. It's just that there's so much to each problem that we encounter and to each solution that we implement, that unless explained, they wouldn't feel so exciting. So as long as I feel there's a need for these explanations, I'm afraid we're gonna be stuck with these long texts!

Comments

Just wondering if there are plans to support multiple dicks, for reptiles and the lot.

Also wondering if ears are part of the head types or independent.

Ears are independent, but with the help of our tech they blend in nicely (as you can see in the images above).

Inflation sliders will still be sliders, but you will choose from different pre-authored body types for the actual body. You can however blend several body types together using a mask in a system we call shape layers. Each layer has its own referenced body type, influence and mask (among other settings). Our technology is so adaptable I'm fairly certain we'll even be able to support completely custom made body types at some point (that are made in an external application and then imported). That would be a later addition though.
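To make the layer idea concrete, here is a small Python sketch of one way such a stack could be evaluated. It is an assumption-heavy illustration: the `ShapeLayer` class, its field names and the additive combination rule are invented for the example, and the real system has more settings and may combine layers differently.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

@dataclass
class ShapeLayer:
    body_type_offsets: List[Vec3]  # per-vertex offset of the body type from the base mesh
    influence: float               # overall strength of this layer, 0..1
    mask: List[float]              # per-vertex weight, 0..1

def apply_shape_layers(base: List[Vec3], layers: List[ShapeLayer]) -> List[Vec3]:
    """Add each layer's masked, weighted offsets on top of the base mesh."""
    result = [list(p) for p in base]
    for layer in layers:
        for i, (offset, m) in enumerate(zip(layer.body_type_offsets, layer.mask)):
            w = layer.influence * m
            for axis in range(3):
                result[i][axis] += w * offset[axis]
    return [(p[0], p[1], p[2]) for p in result]

# Two vertices; the first layer only affects vertex 0, the second only vertex 1.
base = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
layers = [
    ShapeLayer([(0.0, 2.0, 0.0), (0.0, 2.0, 0.0)], influence=0.5, mask=[1.0, 0.0]),
    ShapeLayer([(0.0, 0.0, 4.0), (0.0, 0.0, 4.0)], influence=1.0, mask=[0.0, 0.25]),
]
shaped = apply_shape_layers(base, layers)
```

In this reading, the mask decides where on the body a given body type applies, and the influence decides how strongly.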

Our character creator will more resemble a 3D authoring tool than a game-esque character creator.

You can read more about the idea behind body types and why we choose to go in that direction in this update.

It's not out of the question, it just requires some work. Right now we don't have the time though as we're working on YL2. When/if things calm down a bit, we may create a maintenance release. Add it to the suggestion thread if it hasn't been brought up already:

http://forum.yiffalicious.com/discussion/1298/maintenance-release-additions-fixes

Hello Yiffalicious Team !

I love you guys, I genuinely do, and I think you're pioneers of the genre of VR erotica. Ever since I got my Oculus I have tried out a whole slew of different games and nothing comes quite close to what Yiffalicious has to offer, and I can't wait to see what Yiffalicious has on the horizon.

Suggestion, or rather question: I played another 3D VR experience called Virt-a-Mate (VaM), and overall it was a very clunky experience and more frustrating than anything, but it had a lot of interesting mechanics I was curious if Yiff might incorporate some day.

-VaM allows you to possess the model of your choosing in an interaction's hands and grab your virtual partner and move them around to a degree.

This allows blowjobs to feel more interactive and to feel like you're actually pulling your partner in and away from you, and through all VaM's clunk it was actually a breathtaking experience. (I love your work and I feel like you could really make this work right)

-They had the ability to use an actual internet browser on a TV on the wall in the VR Space and the game came with an ingame VR keyboard so you never had to leave the experience. It worked pretty seamless though from afar was hard to see clearly.

I feel like anything you do on the matter you could do no wrong. Again, excited about YL2 and wish you all the best. Love you guys!

Definitely. A pose/simulation mode is something we've had in mind to include in the character creator. Hopefully we'll be able to implement it for the first release, but if not, then we'll implement it later.

Hey, I posted last month, not sure if you were busy. Just wanted to know if VR positioning of your virtual partner will be possible. I saw the concept first in an Ero-VR game called VaM (VirtaMate) and I think Yiffalicious would do it better than anyone.

anyways ... was just gona say ... it seems like you guys post the news 15 days (mid month ) here .... because I can't wait to read the June's update xD !

Every month in the project update it's almost the same:

- We want to achieve this.

- Looking for existing tech on the Internet, unity store, etc

- Nothing works well for us.

- #### it, let's do it ourselves.

- Profit?

As far as I know, the tech that Odes creates is not intended to be standalone components that can be sold in the Unity store, and with the development of YL2 nearing the first real release, I don't think he has the time to do it.

Not currently planned but we may implement some sort of direct interaction mode at some point. Interaction editor will be implemented first though.

@immeasurability

We use a custom GPU implementation for our smoothing, but the algorithm is very basic and simple to implement:

https://en.wikipedia.org/wiki/Laplacian_smoothing

In pseudo-code, it would be like this:

It's important to note that the result has to be stored in a separate array in order to prevent it from being corrupted by the operation.

Also, since meshes are split along UV seams, there may be duplicate vertices in a realtime mesh, meaning you need to handle that as well when finding adjacent vertices.
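The two points above (writing into a separate array, and treating duplicated seam vertices as one) can be sketched in Python as follows. This is a plain CPU illustration of basic Laplacian smoothing, not the GPU implementation. Matching duplicates by exact position is an assumption made for brevity, and a real mesh may need a distance tolerance instead.

```python
def build_adjacency(positions, triangles):
    """Map every vertex to a representative index shared by all vertices at the
    same position, and collect neighbours per representative."""
    canonical = {}
    group = []
    for i, p in enumerate(positions):
        # Vertices split along UV seams share a position, so they share a group.
        group.append(canonical.setdefault(p, i))
    neighbours = {}
    for tri in triangles:
        ids = [group[i] for i in tri]
        for a in ids:
            for b in ids:
                if a != b:
                    neighbours.setdefault(a, set()).add(b)
    return group, neighbours

def laplacian_smooth(positions, triangles, amount=1.0):
    """Move each vertex towards the average of its neighbours by `amount` (0..1)."""
    group, neighbours = build_adjacency(positions, triangles)
    smoothed = list(positions)  # separate output so reads always see the original data
    for i, p in enumerate(positions):
        adjacent = neighbours.get(group[i])
        if not adjacent:
            continue
        count = len(adjacent)
        average = tuple(sum(positions[j][k] for j in adjacent) / count for k in range(3))
        smoothed[i] = tuple(p[k] + (average[k] - p[k]) * amount for k in range(3))
    return smoothed

# A raised centre vertex surrounded by four others. Index 5 duplicates index 0,
# as would happen where a UV seam runs through the centre.
positions = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0),
             (-1.0, 0.0, 0.0), (0.0, -1.0, 0.0), (0.0, 0.0, 1.0)]
triangles = [(0, 1, 2), (0, 2, 3), (5, 3, 4), (5, 4, 1)]
smoothed = laplacian_smooth(positions, triangles)
```

Because both copies of the centre vertex use the same neighbour set, they end up at the same smoothed position and the mesh does not tear along the seam.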

The Unify wiki has a basic implementation:

http://wiki.unity3d.com/index.php?title=MeshSmoother

@Horsie

Actually, most of our code has been written in a very decoupled way, so our solutions could theoretically be sold in the asset store as separate assets, and it's even something I've considered. We've implemented so many different generic systems that have great value outside of YL2, that I'm sure we could profit from it in more ways than in the app.

However, selling on the asset store comes with responsibilities too. It's not as simple as just uploading your asset there and hauling in the money. You need to be available and provide support for people experiencing issues, and you have to be present in the unity forums answering questions. As you said, it's not something I have time to do right now (or rather I'd much rather spend time working on YL2).

But what is this technology called? https://i.gyazo.com/149fc3f72bc124814eeb41d62f5682df.gif

What should I search for to do it this way?

It looks like soft particles, but for the standard shader, and working in reverse for two meshes!